Do you have to look at a new object 1,000 times to recognize it? Will you recognize it any better after looking at it another 100,000 times? I think not, yet this is how image recognition systems based on Haar cascade classifiers or neural networks work.

SaraVision is an image recognition project that is conceptually very different from the approach the whole world is pinning its hopes on: ever better neural networks trained on ever larger and more accessible datasets. We assume that sometimes one or a few glances at an object are enough to remember it and recognize it later. It all started with the need to add a sense of sight and some intelligence to one of our sub-projects, SaraEye, which raises the current Google and Alexa assistants to the 2.0 level.

At first, to test some basic assumptions, we wrote a simple program that recognizes the handwritten digits of the MNIST dataset. I describe it on our blog in a slightly provocative article, "About the nonsense of deep learning, neural networks in image recognition (using the MNIST kit)". Even at that stage we managed to create a very universal program that recognizes characters regardless of their size, slant or font type, but it was only a programming "sandbox".

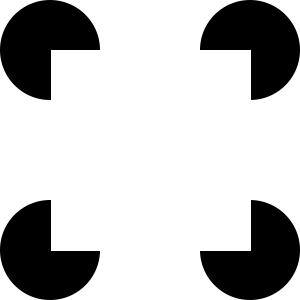

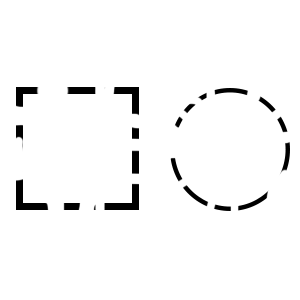

The next stage was to create something more universal that can recognize any object, starting with quick detection of basic geometric figures. We also wanted to check a theory: our brain is very good at seeing what is not actually visible, literally drawing the missing elements in our imagination (see: visual perception and gestaltism, reification theory). We expected our system to work in a similar way:

It worked. As you can see in the amateur video below, the system behaves similarly: it does not have to see the whole square to detect that a square is there.

It may seem that detecting simple figures is easy and that any programmer can do it. You can use "ready-made" programs, or you can write a dedicated algorithm for each case. We do not want to write an algorithm for every shape, though; we want one algorithm for all shapes, and most importantly we do not want to teach the system with thousands of images.

The next step was to test whether the system could handle face detection in camera video. Importantly, the system is designed to detect a face very quickly, even when the face is tilted left or right, turned slightly or fully to the side, poorly lit, or captured in color or in infrared. It worked. Although it is still under construction, the system ran almost 20 times faster than standard face detection systems and, most importantly, coped where other systems could not cope at all (for example, with a face lit from one side by the sun and the head slightly tilted to the side):

(Video recorded on a Raspberry Pi 4 microcomputer, using 20-30% of a single CPU core; the image is analyzed in real time for obstruction from a moving pan-tilt camera that tracks the user's face.)

We are at the beginning of the road, but the results we are getting seem sensational, and we are already thinking about a 3D space recognition system based on our method.

For now we plan to apply our method to our other sub-project, SaraEye, but the possibilities of this system are enormous. Its main advantages are:

1. We don't teach the system with thousands of samples, just a few or a dozen.

2. The angle from which the recognized object is viewed does not matter.

3. Up to 20 times faster object recognition.

4. Minimal computing power (a Raspberry Pi microcomputer can detect a face in 10 ms rather than 500 ms).

5. No internet connection is needed.
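
The speed figures in the list above are per-frame detection times. As a rough illustration of how such a comparison could be measured, here is a minimal timing sketch. It is not SaraVision code: `median_latency_ms` and `speedup` are hypothetical helper names, and the detector is assumed to be any Python callable that takes one frame.

```python
import statistics
import time
from typing import Callable, Iterable, List


def median_latency_ms(detect: Callable[[object], object],
                      frames: Iterable[object],
                      warmup: int = 3) -> float:
    """Return the median time, in milliseconds, that `detect` needs per frame.

    `detect` is a hypothetical detector callable; `frames` can be any
    sequence of inputs it accepts (for example, camera images).
    """
    frame_list: List[object] = list(frames)
    if not frame_list:
        raise ValueError("at least one frame is required")

    # Run a few untimed calls first so caches and lazy initialization
    # do not distort the measurement.
    for frame in frame_list[:warmup]:
        detect(frame)

    timings_ms = []
    for frame in frame_list:
        start = time.perf_counter()
        detect(frame)
        timings_ms.append((time.perf_counter() - start) * 1000.0)

    # The median is less sensitive to occasional slow frames than the mean.
    return statistics.median(timings_ms)


def speedup(baseline_ms: float, candidate_ms: float) -> float:
    """How many times faster the candidate is than the baseline."""
    if candidate_ms <= 0:
        raise ValueError("candidate_ms must be positive")
    return baseline_ms / candidate_ms
```

To compare two detectors fairly, both would need to be timed on the same frames and the same hardware, for example `speedup(median_latency_ms(baseline_detect, frames), median_latency_ms(new_detect, frames))`, where `baseline_detect` and `new_detect` are placeholders for the two systems being compared.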

I do not dismiss image recognition methods based on neural networks, nor the huge progress they have made, especially in recent years. I think tools like TensorFlow are brilliant. But I also think that many things can be done differently and that not everything should be pushed into neural networks. And if we do want to use them, let's give them the data on which they have a chance to work best.